A Machine Learning Approach to Computational Understanding of Skill Criteria in Surgical Training

This project is supported by the National Science Foundation under grant IIS-0904778.

Good training improves surgical skills and reduces surgery-related deaths, but pressure to reduce training costs risks compromising training quality. Successful simulation-based surgical education and training would not only shorten the time a faculty surgeon must spend in the various stages of training (hence reducing the cost) but also improve the quality of training. Reviewing the state of the art, we identify two research challenges: (1) automatically rating the proficiency of a resident surgeon in simulation-based training, and (2) associating skill ratings with corrective procedures. To address these challenges, this project explores a machine-learning-based approach to the computational understanding of surgical skills, based on temporal inference from visual and motion-capture data from surgical simulation. Our approach employs latent space analysis that exploits intrinsic correlations among multiple data sources of a surgical action. This learning approach is enabled by our simulation and data acquisition design, which ensures the clinical meaningfulness of the acquired data.

Dr. Mark Smith, MD, Banner Good Samaritan Medical Center, Phoenix.

Dr. Richard Gray, MD, Mayo Hospital Phoenix.

Post-Doctoral Fellow: Peng Zhang (partially supported)

Graduate Students:

(Some are only partially supported by the project.)

Graduated:

Qiang Zhang (Ph.D., 2014), Gazi Islam (Ph.D., 2013), Zheshen Wang (Ph.D., 2011), Hima Bindu Maguluri (MS, 2013), Naveen Kulkarni (MS, 2011).

Current: Lin Chen, Archana Paladugu, Qiongjie Tian, Yilin Wang

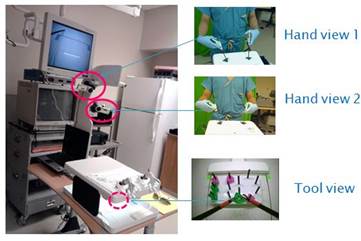

Designing and Building Data Acquisition Units (Completed and deployed):

Resident surgeons are routinely trained on platforms such as the FLS Trainer Box. Such platforms typically have an on-board camera that captures the tool movements; in the case of the FLS Trainer Box, this is an analog camera. To facilitate the development of a training system that can provide live feedback to an operator, it is necessary to capture the hand movements as well (so that any feedback may be delivered in an intuitive manner). To this end, we designed a special-purpose acquisition rig. The rig consists of two camcorders mounted on a custom-built frame, overlooking the hands of the trainee in action from different angles. The camcorders record digital videos of the trainee's hand movements. The system also includes a laptop computer, which is connected to the on-board camera of the training platform. A detailed acquisition protocol has been established and tested. The figure on the left illustrates the system and the video streams captured by the camcorders and the on-board camera. Using video feeds as the basis for analysis frees the trainee from having to wear 3D motion-tracking gloves, which may interfere with the subject's performance.

Acquiring Clinically Meaningful Data from Resident Surgeons (Mostly completed and continuing):

Establishing a clinically meaningful set of data streams of captured surgical actions is critical to subsequent machine-learning-based inference. This is a challenging research task that goes beyond simple data collection, for the following reasons: (i) the captured data must come from well-designed simulations that help assess true surgical skills, to ensure the clinical meaningfulness of the simulation; and (ii) the data need to be annotated to support learning and inference. At a minimum, each stream needs a proficiency label. In practice, to support feedback with recommendations for correction or improvement, the streams need more detailed annotations. Both designing the simulations and defining the annotation schemes require substantial study and collaboration with domain experts to ensure the usefulness of the data.

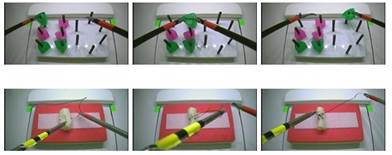

In the project, simulation tasks have been designed for basic practice exercises and complete procedures. Surgical movements such as suturing and knot tying are composed of a few basic movements, herein referred to as basic gestures. These gestures form the building blocks of most complex surgical procedures. Specifically, we have designed two simulation exercises/tasks for the resident surgeons, namely Pegboard Transferring and Intra-corporeal Suturing. Based on these, we have captured data from more than 25 residents in the Banner Health System and Mayo Clinic. The figure on the left illustrates 3 video frames from each of these two tasks.

Developing Analysis Algorithms:

The key task of analysis is temporal inference from multiple data streams, together with the association of the extracted quantifiable structures and features with surgical-skill-defining criteria based on the annotations of the streams. This addresses the challenges in computational understanding of surgical skills discussed above. For the reasons discussed previously, the temporal inference task is centered on the video feeds. As in real life, where a supervising surgeon would look at both the tool movement and the hand movement of a resident surgeon in training, we capture at least two videos, one for the tools and one for the hands. In practice, it is not difficult, and is indeed beneficial, to have multiple perspectives for the tools and the hands. Hence we assume two disparate video sources, one 'tool-view' and one 'hand-view', each having multiple perspectives (i.e., video feeds from different viewpoints). The inference task can now be defined as follows: given multiple video feeds and possibly other auxiliary data streams, infer the surgical-skill-defining criteria using quantifiable computational features and structures.

Recognizing the complexity of the problem and the difficulty of explicitly identifying the complete set of features/structures that define surgical skills, we formulate the task as a machine-learning problem: given a set of video sequences labeled with different proficiency levels (with synchronized measurement streams from other channels), build a system that learns to rate unseen videos; if the training videos contain detailed annotations in addition to simple proficiency labels, the system should also suggest corrective actions for improving the proficiency level shown in the test video.
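As a minimal illustration of this learning formulation, the sketch below summarizes each video by two motion features computed from a tracked tool-tip trajectory and rates an unseen video by its nearest class centroid. This is not the project's actual method (the publications listed below refer to models such as relative hidden Markov models); the nearest-centroid rule, the feature choice, the function names, and the toy tracks are all assumptions made for illustration.

```python
from statistics import mean


def motion_features(track):
    """Summarize a tool-tip track [(x, y), ...] as (path length, mean step size)."""
    steps = [
        ((x1 - x0) ** 2 + (y1 - y0) ** 2) ** 0.5
        for (x0, y0), (x1, y1) in zip(track, track[1:])
    ]
    return (sum(steps), mean(steps) if steps else 0.0)


def fit_centroids(features, labels):
    """Average the feature vectors belonging to each proficiency label."""
    groups = {}
    for feature, label in zip(features, labels):
        groups.setdefault(label, []).append(feature)
    return {
        label: tuple(mean(column) for column in zip(*members))
        for label, members in groups.items()
    }


def rate(centroids, feature):
    """Return the label whose centroid is closest to the given feature vector."""
    def squared_distance(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))
    return min(centroids, key=lambda label: squared_distance(centroids[label], feature))


# Toy labeled training tracks (hypothetical data, not from the project).
training_tracks = [
    [(0, 0), (1, 0), (2, 0), (3, 0)],  # short, direct path
    [(0, 0), (0, 1), (0, 2), (0, 3)],
    [(0, 0), (3, 4), (0, 0), (3, 4)],  # long back-and-forth path
    [(0, 0), (6, 8), (0, 0), (6, 8)],
]
training_labels = ["expert", "expert", "novice", "novice"]

centroids = fit_centroids(
    [motion_features(track) for track in training_tracks], training_labels
)
unseen_track = [(0, 0), (2, 0), (4, 0), (6, 0)]
print(rate(centroids, motion_features(unseen_track)))
```

A real system would replace the hand-picked features with representations learned from the synchronized video streams, and would need the more detailed annotations described above before it could suggest corrective actions rather than only a proficiency label.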

In practice, many aspects of this problem demand computational solutions. For example, in the current acquisition system the cameras are not centrally controlled (central control would require modifying the standard FLS Trainer Box, which is not a good solution since it would make the system less portable), and thus we face the task of synchronizing the video streams. Also, the on-board camera captures interlaced analog video of limited quality, which hinders feature extraction; accordingly, quality enhancement approaches may need to be developed. Furthermore, we have observed that even surgeons who are deemed experts may have very different styles in executing an operation, resulting in very different videos. We are working on these and other challenges of the problem.
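To make the synchronization task concrete, here is a minimal sketch of one common approach: reduce each video to a one-dimensional motion-energy signal and pick the frame offset that maximizes the normalized cross-correlation between the two signals. This is an assumed, generic technique and not necessarily the method used in the project; the frame format (flat lists of pixel intensities), the function names, and the toy signals are hypothetical.

```python
from statistics import mean


def motion_energy(frames):
    """Summed absolute pixel change between each pair of consecutive frames.

    Each frame is a flat list of pixel intensities (a simplified, assumed format).
    """
    return [
        sum(abs(p1 - p0) for p0, p1 in zip(f0, f1))
        for f0, f1 in zip(frames, frames[1:])
    ]


def correlation_at_lag(a, b, lag):
    """Pearson correlation between a[i] and b[i + lag] over their overlap."""
    pairs = [(a[i], b[i + lag]) for i in range(len(a)) if 0 <= i + lag < len(b)]
    if len(pairs) < 2:
        return float("-inf")
    mean_a = mean(x for x, _ in pairs)
    mean_b = mean(y for _, y in pairs)
    cov = sum((x - mean_a) * (y - mean_b) for x, y in pairs)
    var_a = sum((x - mean_a) ** 2 for x, _ in pairs)
    var_b = sum((y - mean_b) ** 2 for _, y in pairs)
    if var_a == 0 or var_b == 0:
        return float("-inf")  # a constant signal carries no timing information
    return cov / (var_a * var_b) ** 0.5


def estimate_lag(a, b, max_lag):
    """Return the lag in [-max_lag, max_lag] at which b best matches a."""
    return max(
        range(-max_lag, max_lag + 1),
        key=lambda lag: correlation_at_lag(a, b, lag),
    )


# Toy motion-energy signals: the hand view starts recording 3 frames early,
# so the same activity appears 3 frames later in its timeline.
tool_view = [0, 0, 1, 5, 2, 0, 0, 3, 1, 0]
hand_view = [0, 0, 0] + tool_view
print(estimate_lag(tool_view, hand_view, max_lag=5))
```

The sketch assumes both streams share the same frame rate and that the tools and hands move at roughly the same moments; differing frame rates or interlaced fields would have to be handled before correlating.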

Developing New Training Systems with Realtime Feedback:

The ultimate objective of the project is to develop new training systems that can provide live feedback to a trainee while he/she is performing a practice operation, so that corrections can be made on the fly to improve performance. Some effort in this regard has already been invested, based on partial results from the analysis stage. The following links illustrate demos of two pieces of software: the first monitors the operator's motion and displays dots whose color reminds the operator whether the movement patterns are within a reasonable range; the second adds indicators for motion jitteriness and counts of dropped objects. These preliminary systems, while seemingly simple and straightforward, have won praise from the resident surgeons, who can now see in real time how they are performing and take action to improve.
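The kind of indicator described above can be sketched as follows. The jitter measure used here (mean magnitude of the second difference of tracked positions) and the color thresholds are assumptions chosen for illustration; the demo software may define jitteriness and its ranges differently.

```python
def jitter_score(track):
    """Mean magnitude of the second difference (discrete acceleration) of a track.

    `track` is a list of (x, y) positions sampled at a fixed frame rate.
    """
    accelerations = [
        ((x2 - 2 * x1 + x0) ** 2 + (y2 - 2 * y1 + y0) ** 2) ** 0.5
        for (x0, y0), (x1, y1), (x2, y2) in zip(track, track[1:], track[2:])
    ]
    return sum(accelerations) / len(accelerations) if accelerations else 0.0


def indicator_color(score, warn=1.0, alert=3.0):
    """Map a jitter score to a dot color; both thresholds are hypothetical."""
    if score < warn:
        return "green"
    if score < alert:
        return "yellow"
    return "red"


# Toy tracks (hypothetical data): steady motion versus rapid back-and-forth.
smooth_track = [(0, 0), (1, 1), (2, 2), (3, 3)]
shaky_track = [(0, 0), (3, 0), (0, 0), (3, 0)]

for name, track in [("smooth", smooth_track), ("shaky", shaky_track)]:
    print(name, indicator_color(jitter_score(track)))
```

In a live system the score would be computed over a sliding window of recent frames so that the indicator reflects the trainee's current movement rather than the whole session.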

Motion Monitoring Demo

Motion and Drop Monitoring Demo


Q. Zhang and B. Li, Relative Hidden Markov Models for Video-based Evaluation of Motion Skills in Surgical Training, IEEE Transactions on Pattern Analysis and Machine Intelligence (PAMI), 2014.

L. Chen, P. Zhang, and B. Li, Instructive Video Retrieval Based on Hybrid Ranking and Attribute Learning, ACM Multimedia (MM), November, 2014.

Q. Zhang, L. Chen, B. Li, Max-margin Multi-attribute Learning with Low-rank Constraint, IEEE Transactions on Image Processing, Vol. 23, No. 7, pp. 2866-76, 2014.

Gazi Islam, Kanav Kahol, Baoxin Li, Development of a Computer Vision Application for Surgical Skill Training and Assessment, in Bio-Informatic Systems, Processing and Applications, J. I. Agbinya, E. Custovic, and J. Whittington (Eds.), River Publishers, 2013, ISBN: 978-8793102187.

Gazi Islam, Kanav Kahol, Baoxin Li, An Affordable Real-time Assessment System for Surgical Skill Training, ACM International Conference on Intelligent User Interfaces (IUI) 2013.

Qiang Zhang and Baoxin Li, Relative Hidden Markov Models for Evaluating Motion Skills, IEEE International Conference on Computer Vision and Pattern Recognition (CVPR), 2013.

Lin Chen, Qiang Zhang, Qiongjie Tian and Baoxin Li, Learning Skill-Defining Latent Space in Video-based Analysis of Surgical Expertise: A Multi-Stream Fusion Approach, NextMed / MMVR 2013 (Medicine Meets Virtual Reality), 2013.

Qiongjie Tian, Lin Chen and Qiang Zhang, Baoxin Li, Enhancing Fundamentals of Laparoscopic Surgery Trainer Box via Designing A Multi-Sensor Feedback System, NextMed / MMVR 2013 (Medicine Meets Virtual Reality) 2013.

Qiang Zhang, Lin Chen, Qiongjie Tian and Baoxin Li, "Video-based Analysis of Motion Skills in Simulation-based Surgical Training", IS&T/SPIE Electronic Imaging, pp. 86670A-86670A, 2013.

Gazi Islam, Kanav Kahol, Baoxin Li, Developing a Real-time Low-cost System for Surgical Skill Training, IEEE International Conference on Multimedia and Expo (ICME), 2013.

Yilin Wang and Baoxin Li, "Building Video-synthesis Tools for Simulation-based Quality Evaluation in 3D Viewing", 7th International Workshop on Video Processing and Quality Metrics, January, 2013.

Gray, R.J., Kahol, K., Islam, G., Smith, M., Chapital, A., Ferrara, J., High-Fidelity, Low-Cost, Automated Method to Assess Laparoscopic Skills Objectively, Journal of Surgical Education, 2012. 69(3): p. 335-339.

Qiang Zhang and Baoxin Li, "Video-based Motion Expertise Analysis in Simulation-based Surgical Training", ACM MM Workshop on Medical Multimedia Analysis and Retrieval (MMAR), November, 2011.

Z. Wang, M. Kumar, J. Luo, B. Li, "Sequence-Kernel Based Sparse Representation for Amateur Video Summarization", ACM MM Joint Workshop on Modeling and Representing Events (J-MRE), November, 2011.

Islam, G. and K. Kahol, Application of Computer Vision Algorithm in Surgical Skill Assessment, in IEEE 6th International Conference on Broadband Communications & Biomedical Applications (IB2COM), 2011: Melbourne, Australia.

Gazi Islam, Kanav Kahol, John Ferrara, Richard Gray, Development of Computer Vision Algorithm for Surgical Skill Assessment, 2nd International ICST Conference on Ambient Media and Systems, March, 2011.

Islam, G. and K. Kahol, Development of Computer Vision Algorithm for Surgical Skill Assessment, in The 2nd International ICST Conference on Ambient Media and Systems, 2011: Porto, Portugal.

Islam, G., K. Kahol, et al., Understanding specialized human motion through gesture analysis: Comparisons of expert and novice anesthesiologists performing direct laryngoscopy using motion capture technology, in International Anesthesia Research Society Meeting, 2011: Vancouver, Canada.

Zheshen Wang and Baoxin Li, Synchronizing Disparate Video Streams from Laparoscopic Operations in Simulation-based Surgical Training, IEEE International Workshop on Artificial Intelligence and Pattern Recognition (AIPR), October, 2010.

Qiang Zhang and Baoxin Li, Towards Computational Understanding of Skill Levels in Simulation-based Surgical Training via Automatic Video Analysis, International Symposium on Visual Computing (ISVC), December, 2010.

Kahol, K., M. Smith, and J. Ferrara. Quantitative Benchmarking of Technical Skill Sets as a Credentialing Strategy. in American College of Surgeons 96th Annual Clinical Congress. 2010. Washington, DC, USA.